Unscented Kalman Filter

The Kalman Filter assumes that the state follows a Gaussian distribution and that the functions applied to it are linear.

In the real world, we often have nonlinear equations: we may be predicting in one direction while our sensor takes readings in another, so the model involves angles and sine and cosine functions, which are nonlinear.

If the function is not linear, will the output be Gaussian distributed? In the figure below, the plot at the bottom right is the probability distribution function for our state x, which is Gaussian. We apply a function f to x, and the plot at the top left is the probability distribution function for f(x). As you can see, it is no longer Gaussian. Hence, if the function is not linear, the output is in general not Gaussian distributed.

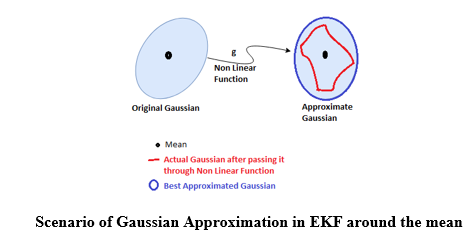
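The same effect can be checked numerically. The sketch below is only an illustration under assumed choices: it draws samples from a standard normal, pushes them through a linear map and through the nonlinear map x², and compares the skewness of the results (skewness is zero for a Gaussian). The helper name `skewness`, the sample size, and both example functions are assumptions made for this example.

```python
import random
import statistics


def skewness(samples):
    """Sample skewness: close to zero for a symmetric distribution such as a Gaussian."""
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)
    return sum(((s - mean) / std) ** 3 for s in samples) / len(samples)


rng = random.Random(0)  # fixed seed so that runs are repeatable
x = [rng.gauss(0.0, 1.0) for _ in range(20000)]

linear = [2.0 * xi + 1.0 for xi in x]   # linear map: the output stays Gaussian
nonlinear = [xi ** 2 for xi in x]       # nonlinear map: the output becomes lopsided

skew_linear = skewness(linear)
skew_nonlinear = skewness(nonlinear)
print(f"skewness after linear map:    {skew_linear:.3f}")
print(f"skewness after nonlinear map: {skew_nonlinear:.3f}")
```

The linear output keeps a skewness near zero, while the squared samples are strongly skewed, so a single Gaussian can only approximate them.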

The Extended Kalman Filter (EKF) uses a Taylor series (and, in more than one dimension, the Jacobian matrix) to linearly approximate a nonlinear function around the mean of the Gaussian, and then predicts the values.

However, the Extended Kalman Filter uses only one point of the distribution (its mean) to linearize the nonlinear function.
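In one dimension the Jacobian is just the derivative, so the idea fits in a few lines. The following is a minimal sketch, not a full EKF: the function name `ekf_propagate` and the example values are assumptions chosen for illustration.

```python
import math


def ekf_propagate(f, df, mean, var):
    """First-order (EKF-style) propagation of a 1-D Gaussian N(mean, var) through f.

    f  : the nonlinear function
    df : its derivative (the 1-D counterpart of the Jacobian)
    """
    slope = df(mean)                 # linearize around the mean only
    new_mean = f(mean)               # the mean is simply pushed through f
    new_var = slope * slope * var    # the variance is scaled by the squared slope
    return new_mean, new_var


# Example: f(x) = sin(x), input Gaussian with mean 0.5 and variance 0.04.
ekf_mean, ekf_var = ekf_propagate(math.sin, math.cos, 0.5, 0.04)
print(ekf_mean, ekf_var)
```

Everything the approximation knows about f comes from its value and slope at the single point `mean`.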

We have just one point to approximate the Gaussian. So, is there a better way to linearize?

Unscented Kalman Filter

The Unscented Kalman Filter (UKF) uses a small set of points, including the mean, and approximates around all of them. Pushing every point of the distribution through the function would be the most obvious solution, but it takes far too much computation to be practical. Moreover, it can be very difficult to transform a whole state distribution through a nonlinear function, but it is very easy to transform a few individual points of it.

These points are known as sigma points: a few points on the source Gaussian are selected, passed through the nonlinear function, and used to build the target Gaussian. The sigma points act as representatives of the whole distribution.

In addition, each sigma point has a weight, which gives more or less preference to some points to make the approximation better.

Unscented Transform

When a Gaussian is passed through a nonlinear function, it does not remain a Gaussian. Instead of linearizing the transformation function, we approximate the resulting non-Gaussian distribution with a Gaussian, using a process called the Unscented Transform.

To summarize, the unscented transform performs the following steps:

1. Compute Set of Sigma Points

2. Assign Weights to each sigma point

3. Transform the points through the nonlinear function

4. Compute Gaussian from weighted and transformed points

5. Compute Mean and Variance of the new Gaussian.
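The five steps can be sketched for a one-dimensional state as follows. This is an illustration under stated assumptions rather than a reference implementation: it uses the standard sigma-point and weight formulas with n = 1 and λ = 2 (that is, 3 − n), and the function name `unscented_transform_1d` is hypothetical.

```python
import math


def unscented_transform_1d(mu, var, g, lam=2.0):
    """Propagate a 1-D Gaussian N(mu, var) through g using three sigma points."""
    n = 1
    # Step 1: sigma points (the mean plus one point on each side of it).
    spread = math.sqrt((n + lam) * var)
    points = [mu, mu + spread, mu - spread]
    # Step 2: weights (the mean gets its own weight; all weights sum to 1).
    side = 1.0 / (2.0 * (n + lam))
    weights = [lam / (n + lam), side, side]
    # Step 3: push every sigma point through the nonlinear function.
    transformed = [g(p) for p in points]
    # Steps 4 and 5: the weighted mean and variance define the new Gaussian.
    new_mu = sum(w * t for w, t in zip(weights, transformed))
    new_var = sum(w * (t - new_mu) ** 2 for w, t in zip(weights, transformed))
    return new_mu, new_var


ut_mean, ut_var = unscented_transform_1d(0.0, 1.0, lambda x: x ** 2)
print(ut_mean, ut_var)
```

For g(x) = x² and a standard normal input this gives a mean close to 1 and a variance close to 2, which match the exact moments of x². A linearization around the mean 0 would instead report mean 0 and variance 0, because the derivative of x² vanishes there.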

Basic Difference between EKF and UKF

The main difference from EKF is that EKF takes only one point, the mean, and approximates around it, whereas UKF takes a set of points called sigma points and approximates around all of them. Sampling the distribution at several points captures more of its spread, and of the function's curvature, than a single point can.

For mildly nonlinear transformations EKF gives good results; for highly nonlinear transformations it is better to use UKF.

EKF approximates a nonlinear function with a linear one using a first-order Taylor series expansion, whereas UKF approximates the non-Gaussian output distribution with a Gaussian using weighted sigma points passed through the unscented transform.

Computing Sigma Points

The number of sigma points depends on the dimensionality of the system. The general formula is 2n + 1, where n denotes the number of dimensions of the state.
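In the standard construction, written here as a sketch with the symbols defined just below, the first point is the mean and the remaining points step out along each column of a matrix square root of the scaled covariance. Which square root is used (for example a Cholesky factor) is an assumption of this sketch.

$$
\begin{aligned}
\mathcal{X}^{[0]} &= \mu \\
\mathcal{X}^{[i]} &= \mu + \left(\sqrt{(n+\lambda)\,\Sigma}\right)_{i} && i = 1, \dots, n \\
\mathcal{X}^{[i]} &= \mu - \left(\sqrt{(n+\lambda)\,\Sigma}\right)_{i-n} && i = n+1, \dots, 2n
\end{aligned}
$$

Here $(\cdot)_i$ denotes the i-th column of the matrix square root.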

χ (Calligraphic X) -> Sigma Points Matrix

μ -> Mean of the Gaussian

n -> Dimensionality of the system

λ -> Scaling Factor

Σ -> Covariance Matrix

χ (Calligraphic X)

χ denotes the sigma point matrix. An important thing to note is that every column of χ is one sigma point. So if the state has 2 dimensions, χ is a 2 × 5 matrix: 5 sigma points, each with 2 components.

λ

λ is the scaling factor, which controls how far from the mean the sigma points are chosen. A commonly cited heuristic for Gaussian distributions is λ = 3 − n.

One of the sigma points is the mean itself; the rest are placed symmetrically around it, at a distance set by λ and the covariance Σ.
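A minimal sketch of the sigma point computation for an n-dimensional state, using only the Python standard library, might look like this. The function names `cholesky` and `sigma_points` are hypothetical, the Cholesky factor is one assumed choice of matrix square root, and the points are returned as a list of 2n + 1 vectors (the columns of χ).

```python
import math


def cholesky(a):
    """Lower-triangular L with L * L^T == a, for a symmetric positive-definite a."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def sigma_points(mu, cov, lam):
    """Return the 2n + 1 sigma points of N(mu, cov) as a list of n-vectors."""
    n = len(mu)
    scaled = [[(n + lam) * c for c in row] for row in cov]
    root = cholesky(scaled)                          # matrix square root
    columns = [[root[r][i] for r in range(n)] for i in range(n)]
    points = [list(mu)]                              # point 0 is the mean itself
    points += [[m + c for m, c in zip(mu, col)] for col in columns]
    points += [[m - c for m, c in zip(mu, col)] for col in columns]
    return points


mu = [1.0, 2.0]
cov = [[4.0, 0.0], [0.0, 9.0]]
points = sigma_points(mu, cov, lam=3 - len(mu))      # heuristic lambda = 3 - n
for p in points:
    print(p)
```

For this 2-dimensional example the function returns five points: the mean, and one point on each side of it along each column of the square root.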

Computing Weights of Sigma Points

The weight of the mean is calculated with a different equation than the weights of the other sigma points. λ is the spreading parameter and n is the dimensionality. An interesting point to note is that the weights sum to 1.
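In the standard form (assumed here, with the same λ and n as for the sigma points) the weights are:

$$
w^{[0]} = \frac{\lambda}{n+\lambda}, \qquad w^{[i]} = \frac{1}{2(n+\lambda)} \quad \text{for } i = 1, \dots, 2n
$$

The sum can be checked directly:

$$
\sum_{i=0}^{2n} w^{[i]} = \frac{\lambda}{n+\lambda} + 2n \cdot \frac{1}{2(n+\lambda)} = \frac{\lambda + n}{n+\lambda} = 1
$$

For example, with n = 2 and λ = 3 − n = 1, the mean gets weight 1/3 and each of the other four points gets weight 1/6.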

Computing Mean and Covariance of the approximate Gaussian

The following equations are used to recover the Gaussian after it has passed through the nonlinear function g:
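In the standard weighted-sum form (assumed here, with the symbols defined below):

$$
\begin{aligned}
\mu' &= \sum_{i=0}^{2n} w^{[i]} \, g\!\left(\mathcal{X}^{[i]}\right) \\
\Sigma' &= \sum_{i=0}^{2n} w^{[i]} \left(g\!\left(\mathcal{X}^{[i]}\right) - \mu'\right)\left(g\!\left(\mathcal{X}^{[i]}\right) - \mu'\right)^{T}
\end{aligned}
$$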

μ′ -> Predicted Mean

Σ′ -> Predicted Covariance

w -> Weights of sigma points

g -> Nonlinear function

χ (Calligraphic X) -> Sigma Points Matrix

n -> Dimensionality

So this was all about the Unscented Transform and how it works.

Prediction Step

The prediction step is very close to what was just discussed, i.e. the Unscented Transform.

- Calculate Sigma Points

- Calculate Weights of Sigma Points

- Transform Sigma Points and Calculate new Mean and Covariance: whenever we predict, the uncertainty grows by some amount because the system becomes a little less certain, and this extra uncertainty is accounted for by the process noise.
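Written out as a sketch, the prediction is the unscented transform with the process noise added to the covariance. The symbol R for the process noise covariance is an assumption (the text does not name it), and g stands for the motion model:

$$
\begin{aligned}
\mu' &= \sum_{i=0}^{2n} w^{[i]} \, g\!\left(\mathcal{X}^{[i]}\right) \\
\Sigma' &= \sum_{i=0}^{2n} w^{[i]} \left(g\!\left(\mathcal{X}^{[i]}\right) - \mu'\right)\left(g\!\left(\mathcal{X}^{[i]}\right) - \mu'\right)^{T} + R
\end{aligned}
$$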

Update Step

The procedure is quite similar to the one used in the Kalman Filter. We map our state from state space to measurement space.

Now there are two options: the sigma points can be generated again, because the predicted mean and covariance have changed and the sigma points depend on them, or the set of sigma points generated earlier can be reused in measurement space.
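In either case, the standard form of the measurement prediction (a sketch, using the symbols defined below) is:

$$
\begin{aligned}
Z^{[i]} &= h\!\left(\mathcal{X}^{[i]}\right) \\
\hat{z} &= \sum_{i=0}^{2n} w^{[i]} \, Z^{[i]} \\
S &= \sum_{i=0}^{2n} w^{[i]} \left(Z^{[i]} - \hat{z}\right)\left(Z^{[i]} - \hat{z}\right)^{T} + Q
\end{aligned}
$$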

Z -> transformed sigma points in measurement space

χ (Calligraphic X) -> Sigma Points Matrix

ẑ -> Mean in measurement space

S -> Covariance in measurement space

Q -> Measurement noise

h -> Function that maps our sigma points to measurement space

Important: Z lives in measurement space, i.e. the space of the measurements coming from the sensor. The function h transforms the sigma points from state space to measurement space so that the prediction and the measurement can be compared in the same units.

Regarding the Kalman Gain, there is a bit of change here.

To calculate the error in prediction, the cross-correlation between the sigma points in state space and the sigma points in measurement space is calculated. The main difference between the standard KF and UKF is the way the Kalman gain K is calculated.
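In the standard form (assumed here, with Z as the sigma points mapped to measurement space, ẑ their weighted mean, and the remaining symbols defined below):

$$
\begin{aligned}
T &= \sum_{i=0}^{2n} w^{[i]} \left(\mathcal{X}^{[i]} - \mu'\right)\left(Z^{[i]} - \hat{z}\right)^{T} \\
K &= T \, S^{-1}
\end{aligned}
$$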

T -> Cross-correlation matrix between state space and measurement space

S -> Predicted measurement covariance matrix

K -> Kalman Gain

Calculating final state
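In the standard form, and assuming the gain is computed as K = T S⁻¹, the final state is:

$$
\begin{aligned}
\mu &= \mu' + K\,(z - \hat{z}) \\
\Sigma &= \Sigma' - K\,S\,K^{T}
\end{aligned}
$$

Because S is symmetric, $K S K^{T} = K T^{T}$, so the covariance update can equivalently be written as $\Sigma = \Sigma' - K\,T^{T}$.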

μ -> Mean

Σ -> Covariance

μ′ -> Predicted Mean

Σ′ -> Predicted Covariance

K -> Kalman Gain

z -> Actual measurement coming from the sensor

ẑ -> Mean in measurement space

T -> Cross-correlation matrix. It takes over the role that H plays in the Kalman Filter and Hⱼ in EKF; for a linear measurement z = Hx it reduces to Σ′Hᵀ.
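Putting prediction and update together, one full filter cycle for a one-dimensional state can be sketched as below. This is an illustration under stated assumptions, not a definitive implementation: the names `ukf_step`, `proc_noise`, and `meas_noise` are hypothetical, λ defaults to 3 − n = 2, and the sigma points are regenerated after prediction (one of the two options mentioned in the update step).

```python
import math


def sigma_points_1d(mu, var, lam):
    """Three sigma points of a 1-D Gaussian: the mean and one point on each side."""
    spread = math.sqrt((1 + lam) * var)
    return [mu, mu + spread, mu - spread]


def weights_1d(lam):
    """Weights for n = 1: one for the mean, one shared by the other two points."""
    side = 0.5 / (1 + lam)
    return [lam / (1 + lam), side, side]


def ukf_step(mu, var, z, g, h, proc_noise, meas_noise, lam=2.0):
    """One predict + update cycle of a scalar UKF; returns the new (mean, variance)."""
    w = weights_1d(lam)

    # Prediction: unscented transform through the motion model g, plus process noise.
    moved = [g(x) for x in sigma_points_1d(mu, var, lam)]
    mu_pred = sum(wi * m for wi, m in zip(w, moved))
    var_pred = sum(wi * (m - mu_pred) ** 2 for wi, m in zip(w, moved)) + proc_noise

    # Update: regenerate sigma points and map them to measurement space with h.
    chi = sigma_points_1d(mu_pred, var_pred, lam)
    zs = [h(x) for x in chi]
    z_hat = sum(wi * zi for wi, zi in zip(w, zs))
    s = sum(wi * (zi - z_hat) ** 2 for wi, zi in zip(w, zs)) + meas_noise

    # Cross-correlation between state space and measurement space, then the gain.
    t = sum(wi * (x - mu_pred) * (zi - z_hat) for wi, x, zi in zip(w, chi, zs))
    k = t / s

    return mu_pred + k * (z - z_hat), var_pred - k * s * k


# Sanity check with linear models, where the UKF should agree with the plain KF.
mu_new, var_new = ukf_step(0.0, 1.0, z=2.0, g=lambda x: x, h=lambda x: x,
                           proc_noise=0.0, meas_noise=1.0)
print(mu_new, var_new)
```

With identity models, a prior of N(0, 1), and a measurement of 2 with unit noise, this reproduces the linear Kalman filter result of mean 1.0 and variance 0.5 up to rounding. Nonlinear `g` and `h` can be passed in the same way.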